Our customers are a testament to the flexible configuration and reliable performance of InterSense’s motion tracking systems. Through these customers, we support a broad array of applications, from simulation & training to the creation of the next Hollywood 3D blockbuster.

CASE STUDIES

F/A-18C Distributed Mission Training

Based on the success of the IS-900 technology deployed with the reconfigurable, rotary wing simul

AVCATT – Aviation Combined Arms Tactical Trainer

AVCATT provides six-man modules, reconfigurable to any combination of attack, reconnaissance, li

ExpeditionDI® Embedded Training for Soldiers

Based on technology developed by CG2® and Quantum3D® for U.S. Army Research Development and Engin

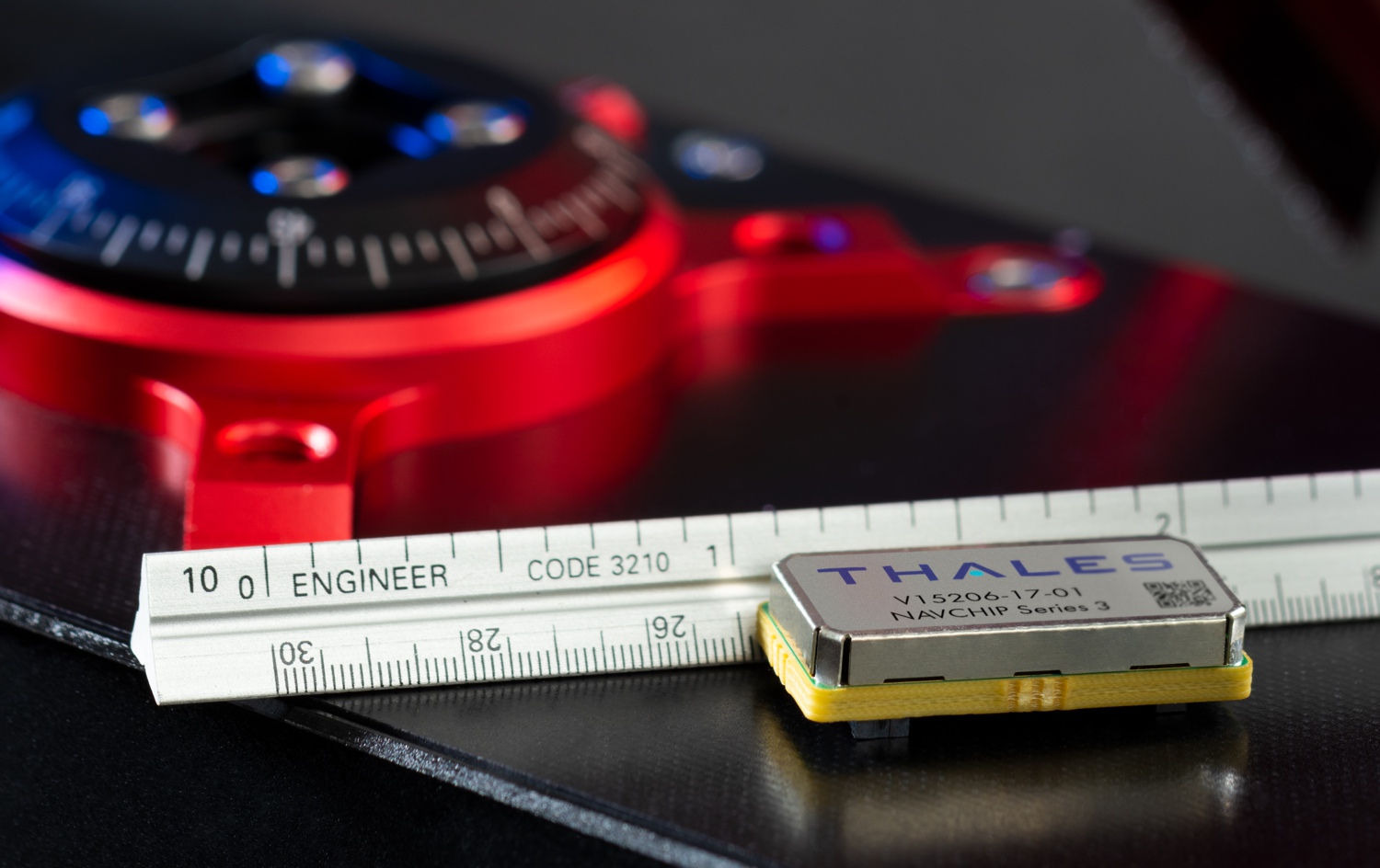

Thales Visionix is a leading developer of innovative inertial sensors and tracking systems for the military, industrial and R&D markets.

Corporate Responsibility

Our vision is to implement a corporate responsibility program that is sustainable in the long-term and has a positive impact on everyone.

Executive Management

Meet our executive management staff, who promote ethical behavior, support diversity, and provide rewarding work in a safe environment.

Environment & Community

Our commitment to our community extends to the sustainability of the environment. We are a proud member of the Maryland Green Registry.

Frequently Asked Questions

We receive a lot of questions; here are a few of the most common ones, along with answers. Contact us if you have questions that are not covered here.

Software Partners

Our software solution partners integrate InterSense’s SDK into their applications to provide seamless tracking interaction with a variety of simulation, design, immersive and entertainment applications. Some software solutions listed below are “InterSense enabled” with middleware tracking libraries provided by software partners.

System Integrators and VARs

Our systems integrators, value added resellers, and OEM hardware partners provide different levels of system and component integration using InterSense tracking technology to meet specific market needs.